# Loading a data set in Colaboratory

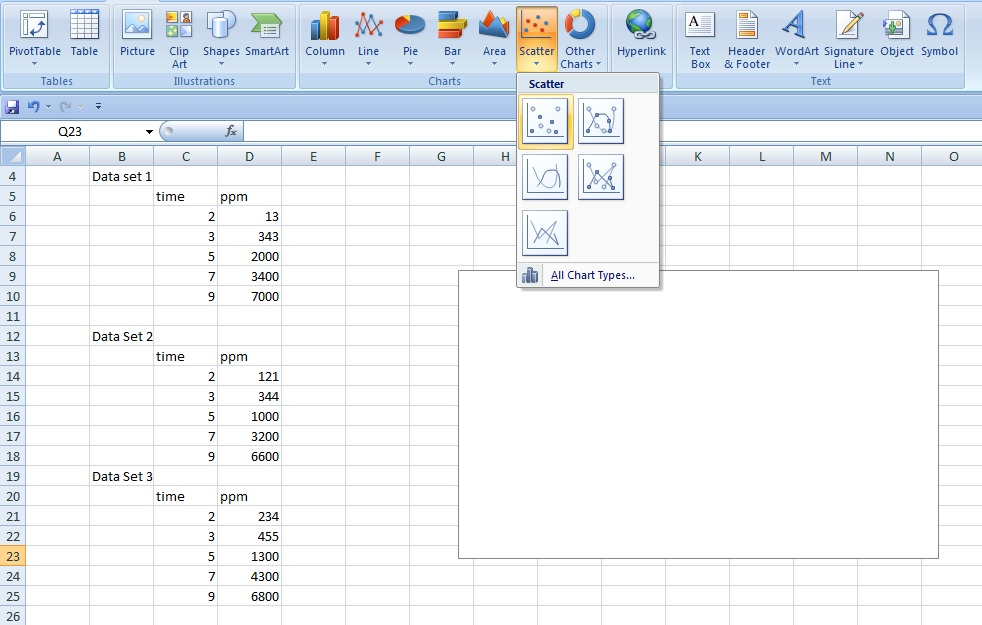

The Platform: Google has scored another hit with Colaboratory (Colab), its in-house data science platform that is freely available for anyone to use. It offers several benefits: a Jupyter-style notebook, free GPU time, easy code sharing and storage, no software installation, coding in the Chrome browser, and compatibility with Python, including access to modules such as scikit-learn. This is a truly great step in making AI and data accessible to all.

The Context: I work for an e-commerce organization, where mis-shipments are ubiquitous in the business. When information about a mis-shipment is received in the system, a team of experts reads the comments the customer has left against every case to determine how to investigate it. As open text fields are difficult to control, customers are free to post messages that may not be actionable, or sometimes not even understandable. Reading comments takes up a considerable amount of time, and given previous labelling information, the junk comments can easily be labelled by text classification algorithms. Given below is a simple classifier which can generate labels with a high level of accuracy, given sufficient training data and a balanced label distribution.

Loading the corpus: The training data consists of two columns, the first containing the comments and the second the labels (0 and 1). First, we load the data onto the Colab environment with the following code:

`df = pd.read_csv(io.StringIO(code('utf-8')),header=None)`

Executing this block (known as a cell in Colab) generates an upload widget, through which the training data needs to be uploaded. Once this operation is complete, the columns are given name references.

Though there are several different ways to classify text, the one I have used involves the NLTK Python package. Stemming reduces a derived word to its base form; for example, 'fish' is the root of words such as 'fishing', 'fished' and 'fisher'. Martin Porter's algorithm is a popular stemming tool, and it is available in NLTK. Stopwords are words that do not add much meaning to a sentence from a feature extraction point of view. Words such as 'after', 'few' and 'right' are frequently ignored by search engines. A list of common stopwords can be found here. I imported `PorterStemmer` and the stopwords from NLTK using the following commands:

`download('porter_test')`

Another pre-processing step was carried out with the regular expressions (`re`) module. This involved removing whitespace, tabs and punctuation, and finally converting all text to lowercase. This step is commonly known as normalization. A function named `pre_process` was created to apply all these steps to any text or block of text in a single line. Now let us look at what this function does to the first 5 comments in the corpus.
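A minimal sketch of the corpus-loading step described above, assuming the upload cell uses `google.colab.files.upload()` (which returns a dict mapping each uploaded file name to its raw bytes) and that the CSV has no header row. The helper name `load_corpus` and the column names `comment` and `label` are hypothetical; the original column names are not shown. The parsing is kept in a plain function so it can also be tried outside Colab.

```python
import io

import pandas as pd


def load_corpus(raw: bytes) -> pd.DataFrame:
    """Parse a header-less, two-column CSV (comment, label) from raw bytes."""
    # Decode the uploaded bytes and wrap them so read_csv can treat them as a file
    df = pd.read_csv(io.StringIO(raw.decode("utf-8")), header=None)
    # Give the two columns name references (hypothetical names)
    df.columns = ["comment", "label"]
    return df
```

Inside a Colab cell, the bytes would come from the upload widget, for example `from google.colab import files`, then `uploaded = files.upload()` and `df = load_corpus(next(iter(uploaded.values())))`. That part only works inside Colab, which is why it is left out of the function.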
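The normalization, stopword-removal and stemming steps described above could be combined roughly as follows. This is a sketch, not the author's `pre_process`: the regular expression and the order of the steps are assumptions, and `STOPWORDS` is a small hypothetical stand-in so the example runs without downloading NLTK data. In Colab you would more likely run `nltk.download('stopwords')` and use `set(stopwords.words('english'))` from `nltk.corpus`.

```python
import re

from nltk.stem import PorterStemmer

# Hypothetical stand-in for NLTK's English stopword list, so no download is needed
STOPWORDS = {"a", "an", "the", "is", "was", "of", "to", "after", "few"}

_stemmer = PorterStemmer()


def pre_process(text: str, stopwords: set = STOPWORDS) -> str:
    """Lowercase, strip punctuation and extra whitespace, drop stopwords, stem."""
    text = text.lower()
    # Replace every character that is not a-z, 0-9 or whitespace with a space
    # (note: this also drops accented letters)
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    # split() with no argument splits on runs of spaces, tabs and newlines
    tokens = text.split()
    # Drop stopwords, then reduce each remaining word with Porter's stemmer
    return " ".join(_stemmer.stem(tok) for tok in tokens if tok not in stopwords)


print(pre_process("Fishing, fished!!"))  # prints "fish fish"
```

With a DataFrame shaped like the corpus, `df["comment"].head(5).apply(pre_process)` would show the effect on the first 5 comments.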
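The classifier itself is not shown in this section. As one possible sketch that stays within NLTK, the example below trains NLTK's `NaiveBayesClassifier` on bag-of-words features. The choice of Naive Bayes, the helper names `bag_of_words` and `train`, and the toy training pairs are all assumptions for illustration, not the author's setup or data. In practice the texts would be the pre-processed comments and the labels the 0/1 column of the corpus.

```python
from nltk.classify import NaiveBayesClassifier


def bag_of_words(text: str) -> dict:
    """Map a (pre-processed) comment to NLTK's feature-dict format."""
    return {f"has({word})": True for word in text.split()}


def train(pairs):
    """pairs: iterable of (text, label) tuples with labels 0 or 1."""
    featuresets = [(bag_of_words(text), label) for text, label in pairs]
    return NaiveBayesClassifier.train(featuresets)


# Toy data made up for illustration; here 1 marks a junk comment (assumption)
train_pairs = [
    ("where is my parcel", 0),
    ("wrong item in the box", 0),
    ("asdf qwerty", 1),
    ("xx xx xx", 1),
]
clf = train(train_pairs)
print(clf.classify(bag_of_words("my parcel is missing")))  # prints 0
```

Naive Bayes is a common baseline for short-text labelling because it trains quickly on small corpora, but with real comments the label balance mentioned above matters: a heavily skewed 0/1 split will bias the learned label prior.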
